In an increasingly globalized world, language barriers are becoming more prominent. They inhibit interaction not only between people but also between people and the information interfaces of the country they are in.

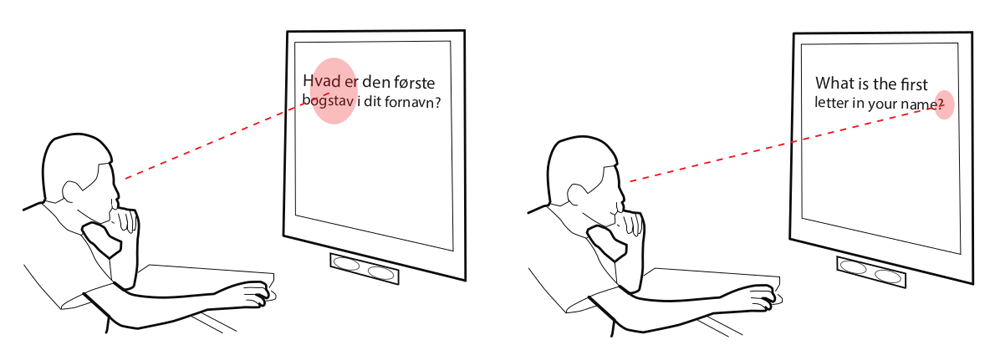

Navigating an interface in an unfamiliar language can be challenging and cumbersome. More often than not, poorly accessible language menus are of little to no help. Implicitly inferring a user’s language proficiency helps relieve customer frustration and boosts the user experience of the system. The following image shows a sketch of such a language-aware interface.

In our work, we look at gaze as an indicator of a user’s understanding. In conversations, we already use telltale facial expressions and body language to gauge the interest of our conversation partner. In our paper Robust Gaze Features for Enabling Language Proficiency Awareness [1], recently published at the CHI 2017 conference, we investigate how well subtle changes in gaze properties can be used to infer the user’s proficiency in the display language.

Literature suggests that text difficulty is a major factor in how people read: they read smoothly when they understand a text and re-read parts that are unclear to them. In a study with 21 participants, we tested this hypothesis by varying text difficulty via the display language. Our database consisted of 15 simple questions in 13 different languages. We showed that certain gaze properties (fixations and blinks) are affected by the display language and that people tend to spend more time focusing on each letter when their language proficiency is low.
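To make the "time per letter" idea concrete, here is a minimal Python sketch of one possible fixation-based feature. It is an illustrative simplification, not the feature set from the paper: the `Fixation` type, the `fixation_time_per_char` function, and the millisecond timestamps are all assumptions made for this example.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class Fixation:
    """One fixation event (hypothetical structure for this sketch)."""
    start_ms: float
    end_ms: float


def fixation_time_per_char(fixations: Iterable[Fixation], text: str) -> float:
    """Return total fixation time divided by the number of non-space characters.

    A higher value means the reader dwelt longer on each letter, which is the
    kind of signal described above for low language proficiency.
    """
    n_chars = sum(1 for ch in text if not ch.isspace())
    if n_chars == 0:
        raise ValueError("text must contain at least one non-space character")
    total_ms = sum(f.end_ms - f.start_ms for f in fixations)
    return total_ms / n_chars


# Example: two fixations of 200 ms each on a four-letter text.
example = [Fixation(0, 200), Fixation(250, 450)]
ms_per_char = fixation_time_per_char(example, "ab cd")
```

A real eye tracker would deliver fixations through its own API and format; the sketch only shows how raw fixation durations might be normalized by text length so that questions of different lengths become comparable.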

The need for assistance (that is, low language proficiency) can be detected in less than three seconds, after which the interface can follow up with a language selection menu. In our paper, we investigate several approaches to ascertaining a user’s language proficiency and show the effects of different proficiency distributions and cost-sensitive classification.
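As a rough illustration of what cost-sensitive classification means in this setting, the following Python sketch applies the standard expected-cost decision rule to a classifier's probability estimate. It is not the method from the paper: the function name `needs_assistance` and the two cost values are assumptions chosen only for this example.

```python
def needs_assistance(p_low: float,
                     cost_missed: float = 5.0,
                     cost_false_alarm: float = 1.0) -> bool:
    """Decide whether to show the language selection menu.

    p_low            -- estimated probability that the user has low proficiency
    cost_missed      -- assumed cost of not helping a user who needed help
    cost_false_alarm -- assumed cost of interrupting a proficient user

    The menu is shown when doing so has the lower expected cost.
    """
    if not 0.0 <= p_low <= 1.0:
        raise ValueError("p_low must be a probability between 0 and 1")
    expected_cost_without_menu = p_low * cost_missed
    expected_cost_with_menu = (1.0 - p_low) * cost_false_alarm
    return expected_cost_with_menu < expected_cost_without_menu


# With a missed user weighted five times as heavily as a false alarm,
# the menu is already offered at a fairly low estimated probability.
show_menu = needs_assistance(0.3)
```

With equal costs the rule reduces to a plain 0.5 threshold. Raising `cost_missed` lowers the threshold, so the interface offers help earlier at the price of more unnecessary prompts.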

In further studies, we plan to evaluate the feasibility of detecting a user’s proficiency in higher-level concepts, such as different forms of graph visualizations, schematics, or maps.

[1] Jakob Karolus, Paweł W. Woźniak, Lewis L. Chuang, and Albrecht Schmidt. 2017. Robust Gaze Features for Enabling Language Proficiency Awareness. In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems (CHI ’17). ACM, New York, NY, USA, 2998-3010. DOI: https://doi.org/10.1145/3025453.3025601