I’m delighted to share that I’ve successfully defended my Ph.D. thesis titled “Variational 3D Reconstruction of Non-Lambertian Scenes Using Light Fields”.

Depth estimation from multiple cameras is the task of estimating, for each pixel of a view, the distance between the camera and the scene. The depth of a single scene point is estimated by finding this point in multiple camera views and triangulating its 3D coordinates relative to the cameras. One of the main challenges is identifying correspondences across multiple views. This task is relatively straightforward for Lambertian materials, i.e. materials that reflect light uniformly in all directions and thus appear the same from different angles. Materials with reflective components – so-called non-Lambertian materials – are more challenging because their appearance changes with the viewing angle, which makes it harder to re-identify a single point across views.
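
To make the triangulation step concrete, here is a minimal sketch for the simplest possible setup: two rectified pinhole cameras that share a focal length and are separated by a known horizontal baseline. This setup, the function name and the parameter names are assumptions chosen for illustration, not the method from the thesis. Once a point has been matched in both views, its depth follows from the horizontal shift (disparity) between the two image positions.

```python
def depth_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Depth in metres of a scene point matched in two rectified views.

    Assumes two identical pinhole cameras whose optical axes are parallel
    (hypothetical setup for illustration).
    disparity_px    : horizontal shift of the point between the views, in pixels
    focal_length_px : focal length expressed in pixels
    baseline_m      : distance between the two camera centres, in metres
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    # Similar triangles: depth / baseline = focal_length / disparity
    return focal_length_px * baseline_m / disparity_px


# A point that shifts by 4 pixels between two cameras 0.5 m apart
depth = depth_from_disparity(disparity_px=4.0, focal_length_px=800.0, baseline_m=0.5)
```

The hard part in practice is not this formula but finding the correct match in the first place, which is where non-Lambertian materials cause trouble.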

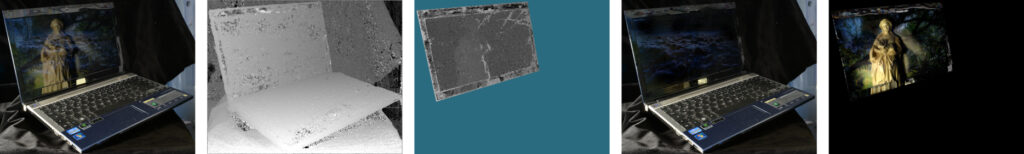

My Ph.D. focused on depth estimation for multi-layered scenes. Such scenes occur in one of two cases: when looking through dirty or stained glass, or when looking at textured high-gloss materials like polished marble. In both cases, the observed color is a combination of two colors, e.g. the texture of the marble and the reflected surrounding scene. Here, standard matching algorithms fail to reconstruct a coherent surface because they sometimes match features belonging to the foreground and sometimes features belonging to the background.

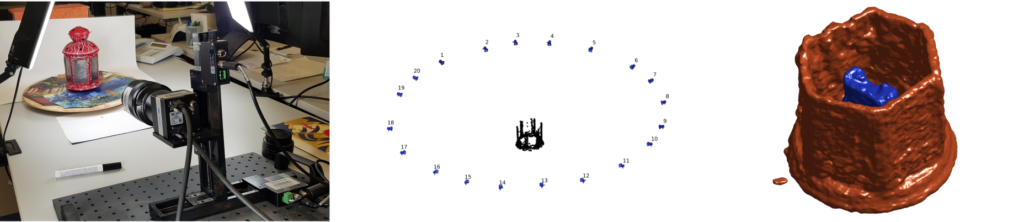

The use of light fields – dense and regular collections of views of the same scene from slightly different positions – gives rise to so-called epipolar plane images. These images allow transforming the task of matching between views into one of orientation analysis. Here, the orientation of a line in the epipolar plane image is directly related to the depth of the corresponding point in the scene. Thus, in the case of multiple layers at different depths, multiple orientations are present.
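
As a rough illustration of orientation analysis on an epipolar plane image, the sketch below builds a synthetic single-layer image and recovers the slope of its lines with a simple least-squares gradient estimate. This is a deliberately basic stand-in and not the variational method from the thesis. The sinusoidal texture, the sign convention (a point at position x0 in the first view appears at x0 + slope * s in view s) and all function names are assumptions made for this example.

```python
import numpy as np


def make_synthetic_epi(slope, num_views=9, width=64, period=32.0):
    """Synthetic EPI of a single textured layer (hypothetical test data).

    Row s holds the same sinusoidal texture shifted by slope * s pixels,
    so every scene point traces a straight line x = x0 + slope * s.
    """
    s = np.arange(num_views, dtype=float)[:, None]  # view index (rows)
    x = np.arange(width, dtype=float)[None, :]      # pixel position (columns)
    return np.sin(2.0 * np.pi * (x - slope * s) / period)


def estimate_epi_slope(epi):
    """Least-squares estimate of one dominant line slope in an EPI.

    Intensity is constant along a line, so E_s + slope * E_x = 0.
    Solving this in the least-squares sense over all pixels gives
    slope = -sum(E_s * E_x) / sum(E_x ** 2).
    """
    grad_s, grad_x = np.gradient(epi)
    # Drop the border, where np.gradient falls back to one-sided differences
    grad_s = grad_s[1:-1, 1:-1]
    grad_x = grad_x[1:-1, 1:-1]
    return -np.sum(grad_s * grad_x) / np.sum(grad_x ** 2)


epi = make_synthetic_epi(slope=0.5)
estimated_slope = estimate_epi_slope(epi)  # close to 0.5, up to finite-difference error
```

A single-slope estimate like this only works when one layer is present. In a multi-layered region two line patterns with different slopes overlap, so one orientation per pixel is no longer enough.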

During my Ph.D., I solved several tasks for this kind of data: estimating masks for regions in which multiple layers occur, estimating depth for multiple layers, and separating the luminosity of the individual layers. Additionally, I worked on relative pose estimation for multiple light field views, which also led to an algorithm for consistent 3D reconstruction of complete scenes with multi-layered parts.

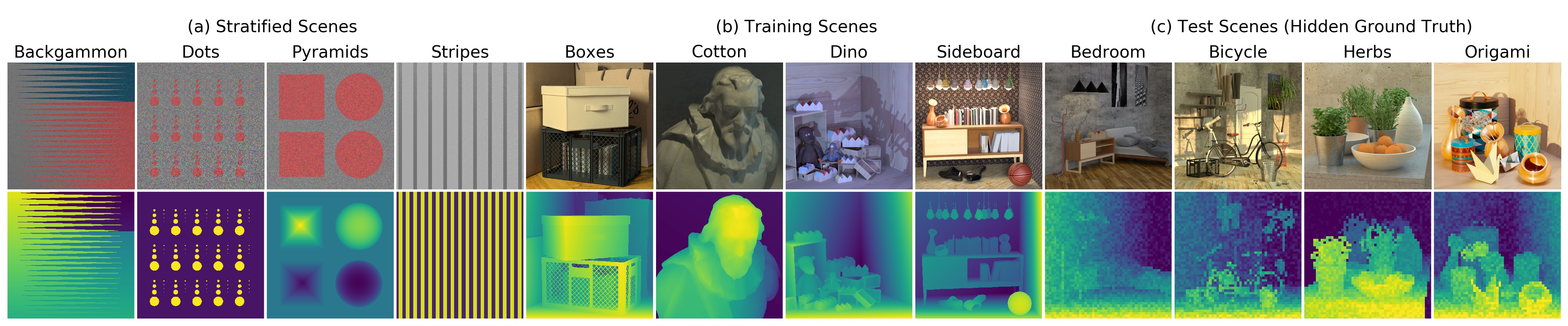

Another main topic of my Ph.D. was the benchmarking of algorithms that perform depth estimation from light fields. Together with Katrin Honauer from Heidelberg University, I developed and deployed the 4D light field benchmark – www.lightfield-analysis.net. This benchmark is the go-to place for evaluating depth estimates from light fields.

I’ve been very fortunate to work together with great collaborators and coauthors. Without them, this work would not have been possible. I would particularly like to thank Antonin, Anna, Katrin, and Urs. I’m also very grateful for the support of my advisors – Bastian Goldlücke and Oliver Deussen – and the DFG for funding this research within project B05 of the SFB-TRR 161.