Inferring the 3D shape of objects shown in images is usually an easy task for a human. To solve it, our visual system simultaneously exploits a variety of monocular depth cues, such as lighting, shading, the relative size of objects or perspective effects. Perceiving the real world with two eyes even allows us to take advantage of another valuable depth cue, the so-called binocular parallax. Because the two eyes view the scene from slightly different positions, the images projected onto their retinas differ slightly. While objects close to the observer undergo a large displacement between the two images, objects that are far away exhibit only a small one. Because nearly all of this happens unconsciously, we usually do not realize how hard the problem really is.

When tackling this task computationally, computer vision researchers have come up with a variety of algorithms, most of them relying on depth cues similar to those mentioned above. Two of the most prominent approaches are known as Stereo Reconstruction and Shape from Shading (SfS). They represent two fundamentally different strategies for image-based 3D reconstruction. Stereo finds corresponding pixels in multiple views and determines the depth from the resulting parallax via triangulation. SfS, in contrast, estimates the depth solely from a reflection model that relates the image brightness to the local surface orientation, which, however, requires information on both the illumination and the reflectivity (albedo) of the scene.
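To make the two cues concrete, here is a minimal Python sketch of the simplest textbook form of each. It is not the model from our paper. For stereo it assumes a rectified pinhole camera pair, where depth follows from disparity as Z = f · B / d. For SfS it assumes a Lambertian surface lit by a single distant light source. The function and parameter names are hypothetical and chosen only for illustration.

```python
import math


def depth_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Triangulated depth for a rectified stereo pair (assumption): Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point at finite depth")
    return focal_length_px * baseline_m / disparity_px


def lambertian_brightness(normal, light_dir, albedo):
    """Lambertian reflection model (assumption): I = albedo * max(0, n . l)."""
    n_len = math.sqrt(sum(c * c for c in normal))
    l_len = math.sqrt(sum(c * c for c in light_dir))
    # Cosine of the angle between the surface normal and the light direction.
    cos_angle = sum(n * l for n, l in zip(normal, light_dir)) / (n_len * l_len)
    return albedo * max(0.0, cos_angle)


# A large displacement means a close object, a small one a distant object.
near = depth_from_disparity(disparity_px=50, focal_length_px=1000, baseline_m=0.1)
far = depth_from_disparity(disparity_px=5, focal_length_px=1000, baseline_m=0.1)

# Brightness of a surface patch that faces the light directly.
frontal = lambertian_brightness((0, 0, 1), (0, 0, 1), albedo=0.8)
```

Note that the stereo function needs only geometry (focal length and baseline), whereas the shading function needs both the light direction and the albedo as inputs, which is exactly the extra information SfS requires.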

In fact, the advantages and drawbacks of the two techniques are quite complementary. Stereo works well for textured objects, since prominent structures facilitate the matching process. At the same time, it needs spatial smoothness assumptions to fill in estimates in homogeneous regions. In the presence of fine surface details, such assumptions pose a problem, as they tend to oversmooth the result. In contrast, SfS can produce detailed reconstructions, since it hardly needs smoothness assumptions. However, textured regions, or equivalently regions with non-constant albedo, cause serious problems, since the decomposition of the observed brightness into albedo/colour and depth is ambiguous. SfS is hence not able to infer the depth uniquely, which makes it difficult to apply in real-world scenarios.
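The ambiguity can be illustrated with a small Python sketch. It assumes a simple Lambertian model with a single distant light source, which is a simplification and not the reflection model from our paper; the helper `brightness` and its parameters are hypothetical. A bright surface patch tilted away from the light and a darker patch facing the light produce practically the same image brightness, so a single brightness value cannot tell the two apart.

```python
import math


def brightness(albedo, tilt_deg):
    """Lambertian brightness (assumption) of a patch tilted by tilt_deg away from the light."""
    return albedo * max(0.0, math.cos(math.radians(tilt_deg)))


# Bright material, surface tilted 60 degrees away from the light.
bright_tilted = brightness(albedo=0.9, tilt_deg=60.0)

# Material half as reflective, surface facing the light directly.
dark_frontal = brightness(albedo=0.45, tilt_deg=0.0)

# Both patches yield (up to rounding) the same observed brightness.
indistinguishable = math.isclose(bright_tilted, dark_frontal)
```

With a known, constant albedo the tilt could be recovered from the brightness, which is why classical SfS assumes uniform reflectivity and struggles on textured objects.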

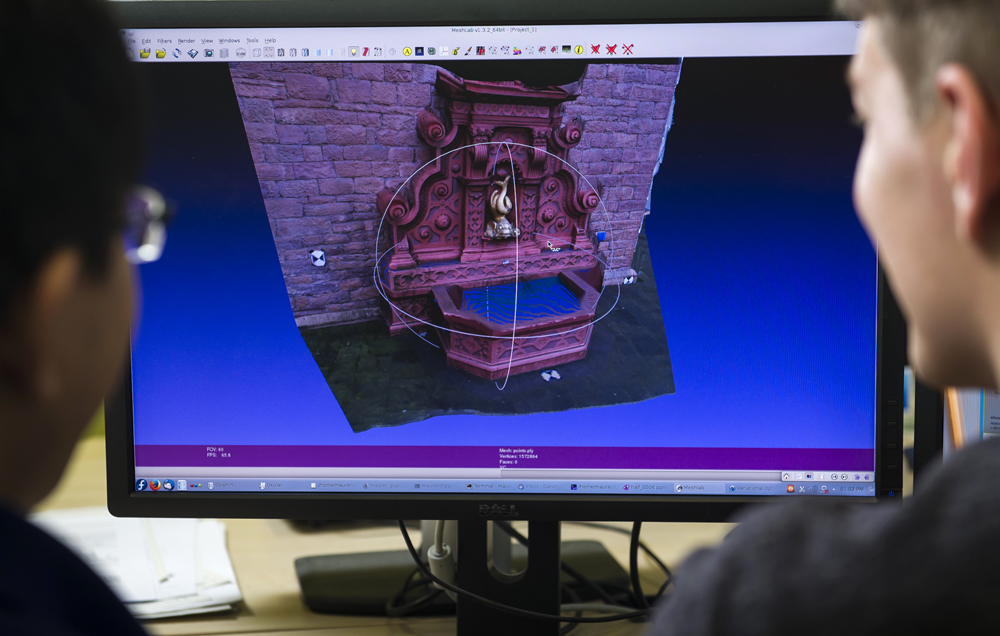

In our recent work presented at the British Machine Vision Conference (BMVC), we propose a novel method that combines the advantages of both strategies. By integrating recent stereo and SfS models into a single minimisation framework, we obtain an approach that exploits shading information to improve upon the reconstruction quality of pure stereo methods.

For more information, read our paper:

D. Maurer, Y. C. Ju, M. Breuß, A. Bruhn: Combining Shape from Shading and Stereo: A Variational Approach for the Joint Estimation of Depth, Illumination and Albedo. In Proc. 27th British Machine Vision Conference, BMVC 2016, York, UK, September 2016 – R. Wilson, E. Hancock, W. Smith (Eds.), BMVA Press, Art. 76, 2016.

See also: Supplementary Material [60MB].
